OpenAI shows Microsoft the money.

- Microsoft’s latest deal with OpenAI is little more than Microsoft supporting what is probably becoming one of its biggest clients.

- Microsoft has extended its relationship with OpenAI beyond its original investment (see here) and the supercomputer that it announced in May 2020 by exclusively licensing OpenAI’s newest language model, GPT-3.

- This means that Microsoft will offer access to GPT-3 as part of Azure and will integrate it with the other services on its cloud computing platform.

- Generative Pre-trained Transformer 3 (GPT-3) is a language model that uses an almost unimaginable 175bn parameters, making it 116x bigger than its predecessor, which means that it requires vast resources to run.

- I think that this is the main reason that Microsoft is all-in on OpenAI because the bigger and more complex the models get, the more Azure resources OpenAI consumes and the more money Microsoft makes.

- This is why it makes little difference to Microsoft whether OpenAI succeeds or fails as almost all of the money that Microsoft invested comes back to Microsoft in the form of revenues for Azure.

- GPT-3 is the latest embodiment of OpenAI’s philosophy which is that artificial general intelligence or transfer learning (AI’s holy grail) can be achieved by throwing as much data and compute as one can lay one’s hands on at the problem.
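
To put the parameter count above into perspective, here is a rough back-of-the-envelope sketch. The 175bn and 116x figures come from the text; the 2 bytes per parameter (16-bit weights) is my own assumption for illustration and not a figure from OpenAI, and the function names are hypothetical.

```python
# Back-of-the-envelope arithmetic on the figures quoted above.
GPT3_PARAMS = 175e9   # 175bn parameters (from the text)
SIZE_RATIO = 116      # "116x bigger than its predecessor" (from the text)


def implied_predecessor_params(params: float, ratio: float) -> float:
    """Parameter count of the predecessor implied by the size ratio."""
    return params / ratio


def weight_memory_gb(params: float, bytes_per_param: int = 2) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes 16-bit weights, which is an illustrative
    assumption and not a published figure.
    """
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    prev = implied_predecessor_params(GPT3_PARAMS, SIZE_RATIO)
    print(f"Implied predecessor size: {prev / 1e9:.2f}bn parameters")
    print(f"Weights alone at 2 bytes each: {weight_memory_gb(GPT3_PARAMS):.0f} GB")
```

Under that assumption the weights alone occupy hundreds of gigabytes, far more than a single accelerator holds, which is consistent with the point that a model of this size can only be run on large amounts of cloud infrastructure.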

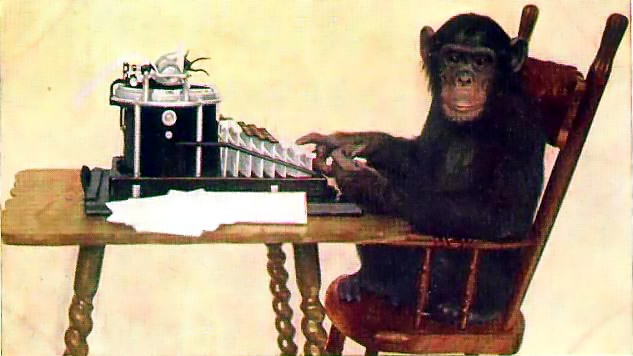

- RFM describes GPT-3 as an example of the infinite monkey theorem which states that given enough time a monkey with a typewriter will eventually come up with the works of William Shakespeare. (see here)

- GPT-3 produces some very impressive content: 48% of humans could not tell whether the content had been written by a human or a machine.

- It also produces a lot of gibberish (which tends to get ignored) and OpenAI’s claims of generality for GPT-3 are not difficult to call into serious question using its own data (see here).

- However, this is exactly what it was created to do as the system was incentivised to produce text that reads plausibly regardless of whether it makes any sense.

- When one digs into what the machine is actually saying, it quickly becomes obvious that the system has no idea what it is doing.

- This is the flaw that is inherent to all deep learning-based systems and progress to fix this problem has been glacially slow.

- The other problem that OpenAI faces is that it will be increasingly difficult to come up with GPT-4 and beyond.

- This is because GPT-3 was effectively set loose on the entire Internet (the global repository of all public data) meaning that finding ever-larger sources of data will become increasingly difficult.

- Furthermore, researchers are increasingly finding that the improvements achieved in performance from ever-increasing amounts of data are getting smaller and smaller.

- This tells me that in terms of making machines appear to be intelligent, deep learning techniques are beginning to approach the limit of what they can achieve.

- This is why I think that OpenAI’s approach is the wrong approach to reaching artificial general intelligence and that OpenAI will eventually run out of money and close its doors.

- In the meantime, Microsoft will make hay while the sun shines and is the main beneficiary of OpenAI’s voracious appetite for compute and storage resources.

- That being said, I would not rush out and buy Microsoft’s shares as they have run far beyond what I would call fair value as have almost all of the big tech names.

- This is the danger of a rally fuelled by money printing and quantitative easing because the stock market is currently in thrall to the Federal Reserve which can’t print money forever.

- When it does finally decide that it cannot print and ease any more, the market will correct to reality and this will take Microsoft with it.

- It’s been a great run but I would be (and am) completely out of this stock now.